I will show you how to improve your Golang application performance by building Redis from scratch. I use Redis as the case study since it’s well-known, fairly easy to implement, and quite challenging to make it perform better. I did a live streaming to demonstrate all of this a while back, but it was in Bahasa. This blog post aims to make the content easier to consume and reach a wider audience by presenting it in a written format and in English. All the code presented here is available, and feel free to ask questions by opening an issue: goredis.

Note that this article is just an introduction, and I have made some simplifications to make it more consumable. There are some important aspects that I have ignored, such as graceful shutdown, handling lost connections, and more realistic benchmarks. Running it on different machines will also affect the results. I wrote this article with more emphasis on the experience of improving Golang’s performance rather than mimicking what typically happens in a real production environment. However, I hope you can still benefit from the techniques presented in this article.

Redis

Redis is an in-memory key-value database. A key-value database is very simple.

At its core, there are two kinds of queries: SET and GET. You can SET a

key to a certain value, and you can GET a key to return its value. Of course,

in practice, we need more capabilities than just SET and GET, such as

delete, timeout, scan, etc. But for simplicity, I’m going to focus on GET and

SET. Redis is a very popular key-value database, and its protocol is very

simple.

Redis has a reputation for being very fast because it stores all of its data in main memory. While accessing main memory is indeed fast, it’s also quite expensive, which is why we often use Redis as a cache, storing only the hot data for fast access while using other databases like MySQL or PostgreSQL to store the actual data. Apart from that, Redis also has other use cases, such as queuing systems and cardinality counting.

To interact with Redis, we can use

commands. Redis provides a bunch of

commands, but we will only focus on

GET and

SET. We will implement these two

commands and improve their performance. If you look at the SET command

documentation, there are actually

quite a lot of options available. But for simplicity, we won’t implement all of

those options and will only focus on using SET to insert a key-value pair.

To get Redis, you can clone the Redis repository and build it from source using

make:

1git clone https://github.com/redis/redis.git --depth=1 --branch 7.4.2

2cd redis

3make -j 24

Once successful, you will get a bunch of executables. We will focus on

redis-server and redis-benchmark. These binaries are located in the ./src

folder after you successfully build Redis. You can use redis-server to run the

Redis server and redis-benchmark to benchmark your Redis server.

You can start the Redis server by just running the redis-server executable. To

interact with the Redis server, you can use redis-cli, which is also compiled

when you run make.

1# you can specify the port using --port flag

2❯ ./src/redis-server --port 6380

3...

41038846:M 23 Jan 2025 21:35:57.171 * Server initialized

51038846:M 23 Jan 2025 21:35:57.172 * Ready to accept connections tcp

Then you can use redis-cli to interact with the Redis server:

1❯ ./src/redis-cli -p 6380 SET the_key_1 "hello world"

2OK

3❯ ./src/redis-cli -p 6380 GET the_key_1

4"hello world"

Now, this is just one way to interact with Redis. There are a bunch of other ways as well. You can interact with Redis from Go using a library like go-redis. There are also graphical user interfaces to interact with Redis. It’s just like other databases such as MySQL and PostgreSQL; you can use the CLI, call it from Go, or open it using tools like DBeaver.

Redis Protocol

All the libraries and tools that can interact with Redis follow the same

protocol specified by Redis called

RESP.

Fortunately, they have done a good job writing the details of

RESP in their

documentation. It’s actually a very simple protocol, but we won’t go through all

of its specifications. We will only implement the protocol enough so that we can

serve SET and GET commands.

RESP is quite simple. A Redis client connects to Redis server through TCP. Once

connected, the client can send a command to the server and the server will reply

with the response of that command. For commands like GET, the response might

be the value you are trying to get from Redis. For commands like SET, the

response is just a string "OK" if it succeeds. The command and response itself

can be depicted as ordinary data structures like strings, integers, and arrays.

When you run GET the_key, basically your request is just an array of strings,

where the first element is "GET" and the second element is "the_key". The

same goes for the response, it’s just an ordinary data structure. In the case of

GET, the response might either be a string containing the value fetched by the

user, or NULL if the key doesn’t exist.

In the RESP documentation, it is explained how to represent each data type. There are a bunch of data types supported by Redis, but we will only care about 3 types: bulk strings, arrays, and nulls.

- Bulk string will be represented like this:

$<length>\r\n<data>\r\n - Simple string will be represented like this:

+<the_string>\r\n - Arrays will be represented like this:

*<number-of-elements>\r\n<element-1>...<element-n>. - Nulls will be represented by

_\r\n.

The graph below will demonstrate what happens in the network when you interact

with Redis server through the redis-cli.

One interesting property of RESP is that its encoding was designed to be human readable. So, if you want to print their communication protocol, you can actually read it as plain text without additional tools.

Implementing Basic TCP Server

Let’s start simple by implementing a basic echo-tcp-server in Go. The implementation is available in this commit. An echo server is just a server that receives a message from a client and sends back the exact same message to the client.

I like to represent the whole redis server as a struct called server. This

struct will contain all the states we need to be a redis server, including the

list of connected clients and the key-value data itself. I like to separate this

from the main package because I want to be able to use this struct as a library.

It is quite useful to do that because we can test it without actually running

the program.

1type server struct {

2 listener net.Listener

3 logger *slog.Logger

4 started atomic.Bool

5}

6

7func NewServer(listener net.Listener, logger *slog.Logger) *server {

8 return &server{

9 listener: listener,

10 logger: logger,

11 started: atomic.Bool{},

12 }

13}

The server will have Start and Stop methods to start and stop the server.

Starting the server is pretty straightforward; we just need to start accepting

TCP connections from the clients by calling Accept. Calling Accept blocks

the current goroutine until there is a client requesting to connect. Once

connected, we will handle the client in a separate goroutine. You almost always

want to handle one client from one separate goroutine because most of the

operations you do will block your goroutine. Reading the client request or

sending response blocks the current goroutine. Your goroutine can’t do anything

else while blocked. That’s why you need one goroutine that accepts new clients,

and separate goroutines to handle those clients. The method handleConn will

handle a single connected TCP client. Because we want to run it on a separate

goroutine, we write go s.handleCon(conn).

1func (s *server) Start() error {

2 if !s.started.CompareAndSwap(false, true) {

3 return fmt.Errorf("server already started")

4 }

5 s.logger.Info("server started")

6

7 for {

8 conn, err := s.listener.Accept()

9 if err != nil {

10 // TODO: handle shutdown.

11 // at this point, the server might be shutting down.

12 // or there is a network problem that is causing the

13 // server to be unable to accept new connections.

14 // If it's the former, we just need to do some cleanup.

15 // If it's the latter, we just need to return the error,

16 // nothing we can do anyway.

17 break

18 }

19 go s.handleConn(conn)

20 }

21 return nil

22}

To handle a single client, we just need to reserve a buffer to hold the message

from the client and send that exact same buffer back to the client. If the

client closes the connection, calling Read will return 0, and we can use

this to detect if the client is disconnected.

Note that this is a very simplified version. There could be a case where the client is lost due to network failure or misconfiguration. In this case, the client can’t tell the server that they’re disconnected. From the server’s point of view, the client just stalls there and doesn’t send anything. To avoid this problem, you need to go further and implement some kind of timeout to close the connection if the client doesn’t send anything for a long time.

1func (s *server) handleConn(conn net.Conn) {

2 for {

3 buff := make([]byte, 4096)

4 n, err := conn.Read(buff)

5 if err != nil {

6 // TODO: handle the error

7 break

8 }

9 if n == 0 {

10 // TODO: this means the client is closing the connection

11 break

12 }

13 if _, err := conn.Write(buff[:n]); err != nil {

14 // TODO: handle the error

15 break

16 }

17 }

18}

Stopping the server is quite straightforward; we just need to call Close on

the TCP listener to stop listening. Although, this is not the most ideal way

because there might be some ongoing connections that are currently processing

the user request. When you stop the server like this, those connections might be

disconnected and the client will receive an error. This is not OK in a

production environment because this means whenever you restart this redis

server, there might be a lot of requests failing.

1func (s *server) Stop() error {

2 if err := s.listener.Close(); err != nil {

3 s.logger.Error(

4 "cannot stop listener",

5 slog.String("err", err.Error()),

6 )

7 }

8

9 // Notice that we haven't closed all remaining active connections here.

10 // In Linux and in this particular case, it's technically not necessary

11 // because closing the listener will also close all remaining connections.

12 // But, in some cases, we might need some kind of graceful shutdown logic

13 // when closing the client.

14

15 return nil

16}

Now, let’s think about a better shutdown mechanism. To shutdown a server, we can just stop everything and exit. The operating system will handle all the cleaning up of resources we have like open sockets, files, allocated memory, etc. The thing is, this might not be the ideal way to shutdown, especially when you are running a service like Redis. The TCP connection in your process might be in the middle of a request. Maybe your client sent you a command and you exit the program before the response is sent to the client. When this happens, your client won’t receive the response and will consider it as an error. It would be better to have a mechanism to wait until all in-flight requests are finished while at the same time stopping new requests. Think of it like a “last order” in a restaurant. When a restaurant is closing, they usually make a last order, where people can no longer order food, but the people that have already ordered will still be able to wait for the food and eat there. This is called graceful shutdown, and it’s a common thing to implement when building a service like Redis.

You might wonder: wait, if I shutdown my server, those clients won’t be able to connect to my server anyway. What’s the point of doing all of those works if in the end, those clients will get an error because the server itself is gone? Well, usually, when you shutdown a server, it is because you want to migrate it to a new version or to a new machine with more capacity. To do this, you can run two servers concurrently and shutdown the old one after that. Because of this, we still need to shutdown gracefully so that the client can move to the new server with zero downtime.

Some other kinds of services such as relational databases might have their own shutdown mechanisms. For example, databases like MySQL typically have a log that records all the operations that happened on the database like when a row is added, deleted, and updated. When the database is restarted, it will replay all the operations recorded in the log to get to the same state as before. Doing this is time-consuming when the log is very large. To reduce the amount of replay needed, they usually perform a snapshot of their data periodically. By doing this, they only need to replay the operations that have been done after the last snapshot. If you are building a service like this, you may want to run the snapshot mechanism when you shutdown the service. By doing that, when you start your service again, it will start instantly because there are no operations that need to be replayed.

I won’t implement those graceful shutdown mechanisms here for simplicity reasons. Just be aware that in real systems, this is something that you should think carefully about.

The next step is to write the main function and run our server. The main

function is pretty simple; we just need to prepare the logger and listener for

our server. I will use Golang’s slog package for the logger and I will listen

to port 3100 for the Redis server. There is no particular reason why I use 3100;

it’s just a number that I chose. Once the server is created, I will run it on a

separate goroutine while the main goroutine will be waiting for a signal to

terminate the server. Once the user sends a termination signal, we will call

server.Stop to stop the server.

1func main() {

2 logger := slog.New(slog.NewTextHandler(os.Stderr, &slog.HandlerOptions{Level: slog.LevelDebug}))

3 address := "0.0.0.0:3100"

4 logger.Info("starting server", slog.String("address", address))

5 listener, err := net.Listen("tcp", address)

6 if err != nil {

7 logger.Error(

8 "cannot start tcp server",

9 slog.String("address", address),

10 slog.String("err", err.Error()),

11 )

12 os.Exit(-1)

13 }

14 server := goredis.NewServer(listener, logger)

15

16 go func() {

17 if err := server.Start(); err != nil {

18 logger.Error("server error", slog.String("err", err.Error()))

19 os.Exit(1)

20 }

21 }()

22

23 c := make(chan os.Signal, 1)

24 signal.Notify(c, os.Interrupt)

25 <-c

26

27 if err := server.Stop(); err != nil {

28 logger.Error("cannot stop server", slog.String("err", err.Error()))

29 os.Exit(1)

30 }

31}

Up to this point, we already have a TCP echo server. We can test it by running our program and connecting to it. You can check the full source code on this commit.

1❯ go run ./goredis/main.go

2time=2025-02-03T03:31:03.218+07:00 level=INFO msg="starting server" address=0.0.0.0:3100

3time=2025-02-03T03:31:03.218+07:00 level=INFO msg="server started"

Once you’ve started the server, you can connect to it. To connect to our server,

we can use nc. You can run nc localhost 3100 in your terminal and start

typing what you want to send to the server there. After you press enter, the

message you typed will be sent to the server and the server will send back the

exact same message. You can type Ctrl+C once you’re done. Pressing Ctrl+C

will send an interrupt to nc and nc will treat it as a termination signal

and exit the process.

1❯ nc localhost 3100

2Hi, there

3Hi, there

4How are you?

5How are you?

Implement RESP

Now we have a working TCP server, let’s start serving Redis requests. As

described by RESP documentation, a Redis command is just an array of strings

encoded using the RESP specification. Let’s write

a function

that takes bytes and parses those bytes into a Go data structure. So, an encoded

string becomes Go’s string and an encoded array becomes Go’s slice.

Before that, I want to point out that instead of taking bytes and returning a Go

data type, we may want to take an io.Reader instead. By taking io.Reader, we

can work with more types instead of just bytes. If you look at the io.Reader

interface, it’s basically just an interface to read a bunch of bytes. If you

want to pass bytes directly, you can still use bytes.Buffer. In our case, when

we accept a client connection, we will get a

net.Conn that also implements io.Reader.

Accepting io.Reader will make it easier for us to work with multiple data

sources. This can be handy for testing. In our real service, the io.Reader is

backed by net.Conn. But, if we want to test our implementation, we can just

pass bytes.Buffer to it and it will work. We don’t have to make a real TCP

connection just to test our RESP parser.

Ok, first, let’s implement a helper function to read a single byte from an

io.Reader.

1func readOne(r io.Reader) (byte, error) {

2 buff := [1]byte{}

3 n, err := r.Read(buff[:])

4 if err != nil {

5 return 0, err

6 }

7 // n represents the number of bytes read when we call `r.Read`.

8 // since `buff`'s length is one, we should read one byte. Otherwise,

9 // the client must've been disconnected.

10 if n != 1 {

11 return 0, io.EOF

12 }

13 return buff[0], nil

14}

Parsing the RESP data type is quite simple. The first byte of the stream

basically gives us information about what data type we should read next. If it’s

a *, then you should parse an array. If it’s a $, then you should parse a

string. There are other data types such as integers, booleans, maps, etc., but

we won’t be focusing on those.

1func readObject(r io.Reader) (any, error) {

2 b, err := readOne(r)

3 if err != nil {

4 return nil, err

5 }

6 switch b {

7 case '*':

8 return readArray(r, false)

9 case '$':

10 return readBulkString(r)

11 default:

12 return nil, fmt.Errorf("unrecognized character %c", b)

13 }

14}

Next, we just need to parse it as described by the standard. If it’s a string, we just need to parse its length and read that many bytes.

1func readBulkString(r io.Reader) (string, error) {

2 size, err := readLength(r)

3 if err != nil {

4 return "", err

5 }

6 buff := make([]byte, size)

7 if _, err := io.ReadFull(r, buff); err != nil {

8 return "", err

9 }

10 b, err := readOne(r)

11 if err != nil {

12 return "", err

13 }

14 if b != '\r' {

15 return "", fmt.Errorf("expected carriage-return character for array, but found %c", b)

16 }

17 b, err = readOne(r)

18 if err != nil {

19 return "", err

20 }

21 if b != '\n' {

22 return "", fmt.Errorf("expected newline character for array, but found %c", b)

23 }

24 return string(buff), nil

25}

26

27func readLength(r io.Reader) (int, error) {

28 result := 0

29 for {

30 b, err := readOne(r)

31 if err != nil {

32 return 0, err

33 }

34 if b == '\r' {

35 break

36 }

37 result = result*10 + int(b-'0')

38 }

39 b, err := readOne(r)

40 if err != nil {

41 return 0, err

42 }

43 if b != '\n' {

44 return 0, fmt.Errorf("expected newline character for length, but found %c", b)

45 }

46 return result, nil

47}

If it’s an array, we just need to read its length and recursively parse the array items as many times as the length of the array.

1func readArray(r io.Reader, readFirstChar bool) ([]any, error) {

2 if readFirstChar {

3 b, err := readOne(r)

4 if err != nil {

5 return nil, err

6 }

7 if b != '*' {

8 return nil, fmt.Errorf("expected * character for array, but found %c", b)

9 }

10 }

11 n, err := readLength(r)

12 if err != nil {

13 return nil, err

14 }

15 result := make([]any, n)

16 for i := 0; i < n; i++ {

17 val, err := readObject(r)

18 if err != nil {

19 return nil, err

20 }

21 result[i] = val

22 }

23 return result, nil

24}

We also need a way to encode our response in RESP format. However, encoding the

response is way easier than parsing it. We could just use fmt.Sprintf or

fmt.Fprintf to do that easily. For example, if you want to encode a string,

just write fmt.Sprintf("$%d\r\n%s\r\n", len(theString), theString). Because of

that, we don’t need a helper to do this.

Implement SET and GET

Now that we already know how to parse RESP messages, we can use them to read

user’s commands and handle them. Instead of just echoing whatever the client

sends to our server, now we will parse it and handle it. We can use the

readArray helper function above to read the command from the user, take its

first element as the command name, and handle them based on the command.

Check out this commit for the full code.

1func (s *server) handleConn(clientId int64, conn net.Conn) {

2 ...

3 for {

4 request, err := readArray(conn, true)

5 ...

6 commandName, ok := request[0].(string)

7 ...

8 switch strings.ToUpper(commandName) {

9 case "GET":

10 s.logger.Debug("handle get command", slog.Int64("clientId", clientId))

11 case "SET":

12 s.logger.Debug("handle set command", slog.Int64("clientId", clientId))

13 default:

14 s.logger.Error("unknown command", slog.String("command", commandName), slog.Int64("clientId", clientId))

15 }

16 ...

17 conn.Write([]byte("+OK\r\n"))

18 }

19 ...

20}

As you can see, we just need to read the client message, parse it using

readArray, take the first element and treat it as the command name. In the

code above, we just log the command; next, we will write a function for each

command we want to handle. In this case, we have a function that handles the

SET command and a function that handles the GET command.

1func (s *server) handleConn(clientId int64, conn net.Conn) {

2 ...

3 switch strings.ToUpper(commandName) {

4 case "GET":

5 err = s.handleGetCommand(clientId, conn, request)

6 case "SET":

7 err = s.handleSetCommand(clientId, conn, request)

8 }

9 ...

10}

11

12func (s *server) handleGetCommand(clientId int64, conn net.Conn, command []any) error {

13 if len(command) < 2 {

14 _, err := conn.Write([]byte("-ERR missing key\r\n"))

15 return err

16 }

17 key, ok := command[1].(string)

18 if !ok {

19 _, err := conn.Write([]byte("-ERR key is not a string\r\n"))

20 return err

21 }

22 s.logger.Debug("GET key", slog.String("key", key), slog.Int64("clientId", clientId))

23 // TODO: the GET logic goes here

24 _, err := conn.Write([]byte("_\r\n"))

25 return err

26}

27

28func (s *server) handleSetCommand(clientId int64, conn net.Conn, command []any) error {

29 if len(command) < 3 {

30 _, err := conn.Write([]byte("-ERR missing key and value\r\n"))

31 return err

32 }

33 key, ok := command[1].(string)

34 if !ok {

35 _, err := conn.Write([]byte("-ERR key is not a string\r\n"))

36 return err

37 }

38 value, ok := command[2].(string)

39 if !ok {

40 _, err := conn.Write([]byte("-ERR value is not a string\r\n"))

41 return err

42 }

43 s.logger.Debug(

44 "SET key into value",

45 slog.String("key", key),

46 slog.String("value", value),

47 slog.Int64("clientId", clientId),

48 )

49 // TODO: the SET logic goes here

50 _, err := conn.Write([]byte("+OK\r\n"))

51 return err

52}

There is nothing magical in the above code. We just write functions to handle

each command and write a few lines of code to fetch its parameters. In the case

of the GET command, we want to fetch the key, and in the case of the SET

command, we want to fetch the key and value. We just return OK for the SET

command and (nil) for the GET command. Next, we will implement the actual

logic to handle SET and GET.

You can think of Redis as a map[string]string. SET and GET commands are

basically just commands to interact with that map. Running SET user1 Andy is

basically just saying m["user1"] = "Andy". And running GET user1 is

basically just like m["user1"]. So, let’s just do that: create a map in the

server struct and handle SET by inserting to that map, and handle GET by

fetching a key from that map. The problem is, you need to make sure that there

are no conflicting operations happening concurrently. You can’t have two

goroutines accessing the same map when one of them is a write operation. This is

what we call a race condition.

It’s a condition where you have two threads that concurrently access the same

memory region and their operations are conflicting. When this happens, you might

end up with corrupted data structures. In Go, the runtime might check this

condition and throw a panic if that happens.

Race Condition: to understand this concept, imagine having a stack of integers. You can push to the stack like this:

1type stack struct { 2 data []int 3 top int 4} 5 6func (s *stack) Push(value int) { 7 s.data[s.top] = value 8 s.top += 1 9}It looks very simple and perfect, but it’s actually not thread-safe and can have problems if you call

Pushfrom two concurrent goroutines. Imagine you have goroutine A and goroutine B. The stack was empty and both of them are callingPushat the same time. It just happens that both goroutine A and B execute line 7 at the same time. As a result, both of them sets.data[0]. Now, goroutine A might executes.top += 1first and then be followed by goroutine B, or the other way around. As a result, once both goroutines finish, the stack’stopfield is incremented twice, yets.data[1]is never filled ands.data[0]is filled twice. This is an example of a race condition. Because there are two conflicting goroutines touching the same memory region, they might corrupt each other.

To avoid race conditions, we can use sync.Mutex or sync.RWMutex. The way we

avoid race conditions is by grabbing a lock before executing critical sections.

We can write our code such that every time we access the map, a lock has to be

acquired first. After we finish accessing the map, we can release the lock.

Other goroutines that might want to access the map will also need to acquire the

lock first. If a lock is already acquired by another goroutine, acquiring the

lock will block the goroutine until the one that holds the lock releases it. To

acquire a lock, you can call the Lock method on sync.Mutex, and you can use

the Unlock method to release the lock.

Now, if you think about it, when two or more goroutines are reading the same

memory region, it shouldn’t be a problem because they’re just reading it. The

race condition will only happen when those goroutines are conflicting. Reading

is not conflicting with another reading. You will only conflict when one of the

operations is a write operation. Because of that, instead of having a simple

locking mechanism, you want the lock to be smart enough so that if the goroutine

that holds the lock only uses it to read data from your map, another goroutine

can also acquire the lock if it’s just for reading the data. This is where

sync.RWMutex can help you. Now, you have 4 methods: Lock, Unlock, RLock,

RUnlock. Lock and Unlock behave similarly to the sync.Mutex. You can use

RLock to acquire the lock for reading the map. When you hold the lock for

reading, another goroutine that calls RLock won’t be blocked.

1type server struct {

2 ...

3 dbLock sync.RWMutex

4 database map[string]string

5}

6

7func (s *server) handleGetCommand(clientId int64, conn net.Conn, command []any) error {

8 ...

9 s.dbLock.RLock()

10 value, ok := s.database[key]

11 s.dbLock.RUnlock()

12 ...

13

14 var err error

15 if ok {

16 resp := fmt.Sprintf("$%d\r\n%s\r\n", len(value), value)

17 _, err = conn.Write([]byte(resp))

18 } else {

19 _, err = conn.Write([]byte("_\r\n"))

20 }

21 return err

22}

23

24func (s *server) handleSetCommand(clientId int64, conn net.Conn, command []any) error {

25 ...

26 s.dbLock.Lock()

27 s.database[key] = value

28 s.dbLock.Unlock()

29 ...

30}

You can find the code in this commit.

Testing And Benchmark

Congratulations, we now have a very simple Redis server. We can start our Redis

server by running go run ./goredis/main.go.

1❯ go run ./goredis/main.go

2time=2025-01-31T22:23:54.458+07:00 level=INFO msg="starting server" address=0.0.0.0:3100

3time=2025-01-31T22:23:54.458+07:00 level=INFO msg="server started"

4...

We can now run commands from redis-cli:

1❯ ./redis-cli -h localhost -p 3100 SET key1 value1

2OK

3❯ ./redis-cli -h localhost -p 3100 GET key1

4"value1"

5❯ ./redis-cli -h localhost -p 3100 GET key2

6(nil)

As you can see, our Redis server works nicely.

Ideally, you want to test your implementation very often. Every time you make a change, you need to make sure that you don’t break the Redis server. To be sure, you need to test it every time you make changes. Testing manually like this is tedious, boring, and prone to error. To make things easier and more reliable, you can write an automated test. Then, you can run your automated test every time you make a change and make sure that the tests don’t break. You can check this commit, this commit, and this commit to see how I wrote the automated tests.

Now, let’s try to see the performance of our server. We are going to benchmark

the server using the redis-benchmark tool that we’ve compiled before.

1❯ ./redis-benchmark -h localhost -p 3100 -r 100000000000 -c 50 -t SET,GET -q

2WARNING: Could not fetch server CONFIG

3SET: 104602.52 requests per second, p50=0.263 msec

4GET: 110132.16 requests per second, p50=0.247 msec

5...

In the command above, we run the redis benchmark using 100 billion possible keys

and using 50 concurrent connections. After you run that command, your server

will be hit by 100,000 requests, and the result is reported. If you see the

result above, our server can handle roughly 104K SET operations per second and

110K GET operations per second. It also reports that the latency of SET

requests has a median of 0.2ms and the latency of GET requests has a median of

0.2ms. You might get different results depending on the machine you’re running.

Note that this number might not be very useful in the real world. In the real world, the access pattern is usually not uniform like this. Some keys are accessed more often than other keys, for example. The timing is also rarely this regular. We also rarely use median to measure the latency. Instead, the 95th percentile is more representative. Having a median of 0.26ms means half of your

SETrequests are served below 0.2ms. But half of the requests is a very small number. What people usually want is to have good latency on 95%, 99%, or even 100% of the requests. But again, we are going to stick with this benchmark mechanism for simplicity’s sake. If you want to do a proper benchmark, you should think about your production environment and try to mimic its access pattern as closely as possible.

Looking at the benchmark result, it’s hard to know whether our Redis server has

good performance. In order to evaluate this number, we can compare it to the

actual Redis implementation. First, let’s start the redis-server.

1❯ ./redis-server --port 3200

2182161:C 31 Jan 2025 22:50:45.805 * oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

3182161:C 31 Jan 2025 22:50:45.805 * Redis version=7.4.2, bits=64, commit=a0a6f23d, modified=0, pid=182161, just started

4182161:C 31 Jan 2025 22:50:45.805 * Configuration loaded

5182161:M 31 Jan 2025 22:50:45.805 * monotonic clock: POSIX clock_gettime

6 _._

7 _.-``__ ''-._

8 _.-`` `. `_. ''-._ Redis Community Edition

9 .-`` .-```. ```\/ _.,_ ''-._ 7.4.2 (a0a6f23d/0) 64 bit

10 ( ' , .-` | `, ) Running in standalone mode

11 |`-._`-...-` __...-.``-._|'` _.-'| Port: 3200

12 | `-._ `._ / _.-' | PID: 182161

13 `-._ `-._ `-./ _.-' _.-'

14 |`-._`-._ `-.__.-' _.-'_.-'|

15 | `-._`-._ _.-'_.-' | https://redis.io

16 `-._ `-._`-.__.-'_.-' _.-'

17 |`-._`-._ `-.__.-' _.-'_.-'|

18 | `-._`-._ _.-'_.-' |

19 `-._ `-._`-.__.-'_.-' _.-'

20 `-._ `-.__.-' _.-'

21 `-._ _.-'

22 `-.__.-'

23

24182161:M 31 Jan 2025 22:50:45.806 * Server initialized

25182161:M 31 Jan 2025 22:50:45.806 * Loading RDB produced by version 7.4.2

26182161:M 31 Jan 2025 22:50:45.806 * RDB age 105 seconds

27182161:M 31 Jan 2025 22:50:45.806 * RDB memory usage when created 16.70 Mb

28182161:M 31 Jan 2025 22:50:45.846 * Done loading RDB, keys loaded: 199983, keys expired: 0.

29182161:M 31 Jan 2025 22:50:45.846 * DB loaded from disk: 0.041 seconds

30182161:M 31 Jan 2025 22:50:45.846 * Ready to accept connections tcp

31...

And then, we can re-run the benchmark command above but for port 3200 since we

use that port to run the redis-server.

1❯ ./redis-benchmark -h localhost -p 3200 -r 100000000000 -c 50 -t SET,GET -q

2SET: 136612.02 requests per second, p50=0.183 msec

3GET: 122399.02 requests per second, p50=0.223 msec

As you can see, your performance is similar to the actual Redis. We don’t do any tricks here. What we’ve done is roughly the same as what Redis has done as well.

Redis can actually perform better than that. During benchmarking, there is

actually some overhead in the communication. Each time the client (in this case

the client is redis-benchmark) sends a command to Redis server, the command

needs to be copied from the client’s memory to kernel memory and copied again

from kernel memory to Redis server memory. There are a bunch of copying

operations between user space and kernel space here and it might affect the

performance. In order to reduce this overhead, we can use pipelined benchmark.

Without pipeline, in order to send N requests to the Redis server, the client

will need to send a command to Redis server and then wait for the response for

N times. This means there are roughly 2*N times copy performed from user

space to kernel space. By using pipeline, the client can send those N requests

at once and wait for N responses at once as well. By doing that, the number of

interactions between the user space and kernel will be minimized.

Again, note that most real-world applications don’t do pipelining. What we do here is not really depicting what will happen in a production environment. We do pipelining to make things more interesting and see how high the throughput we can get, and how low the latency we can get.

Let’s run the benchmark again for the actual Redis server with pipeline enabled.

1❯ ./redis-benchmark -h localhost -p 3200 -r 100000000000 -c 50 -t SET,GET -P 10000 -q

2SET: 2130160.75 requests per second, p50=3.079 msec

3GET: 2652540.00 requests per second, p50=14.255 msec

By adding -P 10000, we set the redis-benchmark to pipeline 10,000 requests

into a single message. As you can see, now we have higher throughput. Now we get

about 2.1 million SET operations per second and 2.6 million GET operations

per second. It’s almost 10x higher. But, you might notice that the latency is

increasing. The median of SET’s latency has now increased to 3ms from

previously only 0.23ms. This is the effect of pipelining. When we pipeline

requests together, some requests might end up being served a little bit longer.

Previously, to handle a request, you just needed to send the command to Redis

server and wait for the response. With pipelining, when you want to execute a

command, you don’t send the command directly, but you need to wait for the other

9,999 commands first to be sent together as a batch.

Let’s try benchmarking our Redis server written in Go using pipelining:

1❯ ./redis-benchmark -h localhost -p 3100 -r 100000000000 -c 50 -t SET,GET -P 10000 -q

2WARNING: Could not fetch server CONFIG

3SET: 103413.09 requests per second, p50=6.135 msec

4GET: 109966.45 requests per second, p50=5.279 msec

As you can see, the throughput doesn’t change that much. This makes sense because we don’t really have any special code to handle pipelined requests. As far as we know, the requests are coming one by one and we handle them one by one as well. There is no optimization we’ve done to handle pipelined requests. The latency is also increasing, which also makes sense due to the same reason the latency increased in the actual Redis server benchmark.

Now, instead of using redis-benchmark, we will benchmark our Redis server

implementation using Golang’s benchmarking framework. By writing our own

benchmark, we can have more flexible benchmarking logic. We can, for example,

simulate a load where the access pattern resembles the access pattern in our

actual production environment. We can also run profiler to trace our CPU and

memory usage. This is very useful to find our app’s bottleneck, which will help

us to build applications with better performance. For simplicity’s sake, our

benchmark will just use uniformly distributed keys for SET command, just like

redis-benchmark. We are not going to write benchmark test for GET command.

There is nothing special about the GET command, so it can be left as an

exercise for the reader.

To create a benchmark test, you just need to write a function with Benchmark

prefix, accept a *testing.B as its parameter, and return nothing. This is the

convention used by Golang’s testing framework. I’ll put the benchmark logic in

server_test.go:

1func BenchmarkRedisSet(b *testing.B) {

2 ...

3}

To do the benchmark, first we will initialize the server. Ideally, we want our benchmark code to be efficient so that it won’t affect the code we’re running. To reduce the TCP overhead, we are going to use sockfile instead of opening a socket. By using sockfile, the communication between the client and the server will not be using TCP, but it will be done internally in the kernel.

1const sockfilePath string = "/tmp/redisexperiment.sock"

2

3func BenchmarkRedisSet(b *testing.B) {

4 _ = os.Remove(sockfilePath)

5 listener, err := net.Listen("unix", sockfilePath)

6 if err != nil {

7 b.Errorf("cannot open sock file: %s", err.Error())

8 return

9 }

10 noopLogger := slog.New(slog.NewTextHandler(io.Discard, nil))

11 server := NewServer(listener, noopLogger)

12 go func() {

13 if err := server.Start(); err != nil {

14 b.Errorf("cannot start server: %s", err.Error())

15 }

16 }()

17

18 // you need to reset the timer here to make sure that

19 // the initialization code above won't be considered as

20 // part of the benchmark.

21 b.ResetTimer()

22 ...

23}

Now, we just need to spawn some goroutines that will simulate the client. One

goroutine will simulate a single client connection. Instead of spawning

goroutines manually, we can use b.RunParallel helper function provided by

Golang’s testing framework. We don’t spawn goroutines manually because using

b.RunParallel is quite handy. It provides you a way to distribute the load to

multiple goroutines efficiently. Suppose you want to hit your server with

100,000 requests. Now you need to make sure that the sum of the number of

requests sent by each one of your spawned goroutines should be 100,000.

b.RunParallel can help you with this. b.RunParallel gives you a parameter

*testing.PB that you can use to check if your goroutine should send a request

or not. Calling pb.Next() returns true if you need to send another request

to the server, and it will return false if you’ve sent 100,000 requests to the

server and you can stop now. Additionally, by using b.RunParallel you can

configure the number of goroutines that will be used without changing the code.

You can configure it from the command line when you run the benchmark. You can

also do all of those manually, but for most cases, it’s more convenient to use

b.RunParallel.

1func BenchmarkRedisSet(b *testing.B) {

2 ...

3 id := atomic.Int64{}

4 b.RunParallel(func(pb *testing.PB) {

5 // First, you need to create a connection to the server

6 client, err := net.Dial("unix", sockfilePath)

7 ...

8 randomizer := rand.New(rand.NewSource(id.Add(1)))

9 pipelineSize := 100

10 buff := make([]byte, 4096)

11 writeBuffer := bytes.Buffer{}

12 count := 0

13

14 // pb.Next() returns true if you still need to send a request

15 // to the server. When it returns false, it means you already

16 // reached your target and you can stop sending requests.

17 for pb.Next() {

18 // These lines generate SET command with randomized key and value.

19 // We put the command to a buffer called `writeBuffer`. After the buffer

20 // is big enough, we send it to the server in one go. That's how we

21 // pipeline the requests.

22 writeBuffer.WriteString("*3\r\n$3\r\nset\r\n$12\r\n")

23 for i := 0; i < 12; i++ {

24 writeBuffer.WriteByte(byte(randomizer.Int31()%96 + 32))

25 }

26 writeBuffer.WriteString("\r\n$12\r\n")

27 for i := 0; i < 12; i++ {

28 writeBuffer.WriteByte(byte(randomizer.Int31()%96 + 32))

29 }

30 writeBuffer.WriteString("\r\n")

31

32 // After reaching a certain threshold, we send the generated requests

33 // to the server and wait for the response. We don't do anything

34 // with the response other than read it.

35 count++

36 if count >= pipelineSize {

37 if _, err := writeBuffer.WriteTo(client); err != nil {

38 b.Errorf("cannot write to server: %s", err.Error())

39 return

40 }

41 if _, err := io.ReadFull(client, buff[:5*count]); err != nil {

42 b.Errorf("cannot read from server: %s", err.Error())

43 return

44 }

45 count = 0

46 }

47 }

48

49 if count > 0 {

50 if _, err := writeBuffer.WriteTo(client); err != nil {

51 b.Errorf("cannot write to server: %s", err.Error())

52 return

53 }

54 if _, err := io.ReadFull(client, buff[:5*count]); err != nil {

55 b.Errorf("cannot read from server: %s", err.Error())

56 return

57 }

58 count = 0

59 }

60 ...

61 })

62 ...

63}

Don’t forget to close the server after the test is finished.

1func BenchmarkRedisSet(b *testing.B) {

2 ...

3 b.StopTimer()

4 if err := server.Stop(); err != nil {

5 b.Errorf("cannot stop server: %s", err.Error())

6 return

7 }

8

9 // We can also add our own metrics to the benchmark report like this:

10 throughput := float64(b.N) / b.Elapsed().Seconds()

11 b.ReportMetric(throughput, "ops/sec")

12}

You can find the full benchmark code here.

To run the benchmark, you can execute this command:

1# -bench=BenchmarkRedisSet is used to specify which benchmark function you want to run

2# -benchmem is used to tell the framework that you want to benchmark the memory usage

3# -benchtime=10s is used to specify the duration of the benchmark

4# -run="^$" is saying that you don't want to run any unit test

5❯ go test . -v -bench=BenchmarkRedisSet -benchmem -benchtime=10s -run="^$"

6goos: linux

7goarch: amd64

8pkg: github.com/jauhararifin/goredis

9cpu: AMD Ryzen 9 7900X 12-Core Processor

10BenchmarkRedisSet

11BenchmarkRedisSet-24 9774513 1289 ns/op 776083 ops/sec 585 B/op 42 allocs/op

12PASS

13ok github.com/jauhararifin/goredis 14.199s

Once the benchmark finished, you can see the result. As you can see above, the

benchmark runs about 14 seconds, sending about 9.7 million requests. The

throughput of the system is roughly 776K requests per second and each request is

handled at 1,289 nanoseconds on average. Since we add -benchmem, the result

also shows the number of allocations we made for each request.

You might wonder, how come we are able to handle 776K requests per second when

running it from Golang’s benchmark framework, but we only get about 28K requests

per second on redis-benchmark? The latency also differs quite a lot; using

redis-benchmark shows that the latency is about 5ms, while using Golang’s

testing framework it’s about 1,289 nanoseconds. Well, for latency, they use

different metrics. redis-benchmark shows the median, but the Golang’s testing

framework shows the average. The difference is expected, but in our case, the

differences are huge, so it’s a little suspicious.

TCP Overhead

Let’s analyze what is happening here. How is benchmarking using Golang’s

framework outperforming redis-benchmark by a lot? In theory, they shouldn’t,

since they are practically doing the same thing. We can start questioning

ourselves about what is different between our benchmark vs redis-benchmark.

One difference is that our benchmark is using a unix sockfile instead of an

actual TCP connection. By using a sockfile, we reduce some overhead done by TCP.

Let’s try to use a TCP connection instead of a unix sockfile.

1func BenchmarkRedisSet(b *testing.B) {

2 ...

3 listener, err := net.Listen("tcp", "0.0.0.0:3900")

4 ...

5 b.RunParallel(func(pb *testing.PB) {

6 client, err := net.Dial("tcp", "localhost:3900")

7 ...

8 })

9}

Now, let’s run our benchmark again:

1❯ go test . -v -bench=BenchmarkRedisSet -benchmem -benchtime=10s -run="^$"

2goos: linux

3goarch: amd64

4pkg: github.com/jauhararifin/goredis

5cpu: AMD Ryzen 9 7900X 12-Core Processor

6BenchmarkRedisSet

7BenchmarkRedisSet-24 7970709 1620 ns/op 617151 ops/sec 615 B/op 42 allocs/op

8PASS

9ok github.com/jauhararifin/goredis 14.783s

As you can see, the throughput drops a little. Previously it was 776K ops/sec,

now it is 617K ops/sec. It drops about 20%. However, it’s still much higher than

the benchmark result from redis-benchmark. The redis-benchmark only reaches

about 28K ops/sec, about 20x smaller than our current benchmark. Let’s analyze

further.

Logging Overhead

The second thing that differentiates between our benchmark and redis-benchmark

is that we don’t log anything in our test. If you notice, in the benchmark logic

above, I initialize the logger like this:

1noopLogger := slog.New(slog.NewTextHandler(io.Discard, nil))

You see, I put io.Discard as the log destination. This means whenever we log

something, it will just be dropped and not written anywhere. This is not what we

did when benchmarking with redis-benchmark. If you notice, in our main

function, I initialize the logger by doing this:

1logger := slog.New(slog.NewTextHandler(os.Stderr, &slog.HandlerOptions{Level: slog.LevelDebug}))

When we benchmark using redis-benchmark, we use os.Stderr as the log

destination. And if you notice, every time we handle a SET and GET request,

we log its parameters. This means when we benchmark our code using

redis-benchmark, we add extra overhead by logging it, while we don’t have that

overhead in our benchmark code that uses Golang’s testing framework.

Let’s see how our benchmark performs if we also use os.Stderr to print our

log. We also use slog.LevelDebug as the log level so that our debug print will

be printed.

1noopLogger := slog.New(slog.NewTextHandler(io.Stderr, nil))

1❯ go test . -v -bench=BenchmarkRedisSet -benchmem -benchtime=10s -run="^$"

2...

3time=2025-02-03T04:01:09.250+07:00 level=DEBUG msg="SET key into value" key=z&k1:4QJIKHh value=PJNL-B4HTz@ clientId=14

4time=2025-02-03T04:01:09.250+07:00 level=INFO msg="closing client" clientId=14

5BenchmarkRedisSet-24 1434164 8348 ns/op 119787 ops/sec 601 B/op 43 allocs/op

6PASS

Now our benchmark result matches the one we did using redis-benchmark. We

still have different results for the latency, but this is expected because we

are reporting different metrics here. Here, the latency is the average latency

while redis-benchmark reports the median of the latency. Neither metric is a

good measure of latency, but we won’t be focusing on that too much for now.

I will keep using unix sockfile and turn off the logging for benchmarking

purposes. This is because we want to focus on Redis itself instead of the

overhead of TCP and logging. In the real world, we don’t usually enable debug

logs; we usually only log when a client is connected or disconnected. So,

disabling the log here makes more sense because it mimics what we’ll be doing in

a production environment. We also use sockfile instead of TCP connection here

because there is not much we can do with TCP connection. In the end, the

performance of TCP depends on many external factors as well, such as the cable

we are using, how far apart the client and server are, etc. There is technically

something we can do to reduce the overhead of TCP connection like using

io_uring, but I won’t

cover that in this article because it’s quite involved and this article is

supposed to be an introduction.

Profiling

To improve the performance of an application, you need to know where the bottleneck is. You need to identify what makes your app slow or has low throughput. You don’t want to improve your app on things that don’t matter. Applications consist of multiple parts working together. Sometimes, it’s not obvious which component we should improve. Improving a component that plays a little role in the whole application performance won’t give us meaningful performance improvement. For example, in our Redis server, there is logic to accept client connections, read their requests, process the requests, and send back the responses. Improving things like accepting client connection logic or shutdown logic won’t increase our throughput or reduce our latency.

The more complex your application, the harder it is to find the performance bottleneck. Fortunately, we can profile our code to find it. We will first focus on CPU profiling. There are other types of profiling like memory profiling, block profiling, mutex profiling, etc. Conceptually, what CPU profiling does is just sample our CPU periodically. When we run our benchmark and turn on the CPU profiler, Golang’s runtime will periodically snapshot our CPU position. It records what function our CPU is currently on (for each thread). So after the benchmark finishes, we will have a file containing the list of samples. Each sample basically is just a stacktrace of the function that our CPU is currently executing at that moment. The idea is: if there is a particular function that is sampled many times (compared to other functions), then this function must be the bottleneck. The more a function is sampled, the more our CPU is spending its time there. So, it makes sense that those functions could be the bottleneck of our application. This is not always the case, though. Some other types of applications might have bottlenecks on the network. When that happens, our CPU won’t do anything meaningful. If our app is slow because its internet speed is slow, then our CPU doesn’t actually do anything, and the result of CPU profiler won’t be meaningful.

Most programming languages have ways to help us profile our code. Profiling in

Golang is quite straightforward as well. You can add -cpuprofile=cpu.out to

enable CPU profiling during benchmark test. Once the benchmark is finished, you

will have a cpu.out file containing all the sampled CPU positions.

1❯ go test . -v -bench=BenchmarkRedisSet -benchmem -benchtime=10s -cpuprofile=cpu.out -run="^$"

2goos: linux

3goarch: amd64

4pkg: github.com/jauhararifin/goredis

5cpu: AMD Ryzen 9 7900X 12-Core Processor

6BenchmarkRedisSet

7BenchmarkRedisSet-24 8788383 1436 ns/op 696278 ops/sec 600 B/op 42 allocs/op

8PASS

9ok github.com/jauhararifin/goredis 14.241s

10❯ ls -l cpu.out

11-rw-rw-r-- 1 jauhararifin jauhararifin 52565 Feb 3 06:47 cpu.out

As you can see above, after you run the benchmark test, you will have a file

called cpu.out. This file contains the result of the CPU profiling. There are

a bunch of ways to analyze this file. Go itself has a tooling we can use to

analyze it, you can execute go tool pprof cpu.out to analyze it:

1❯ go tool pprof cpu.out

2File: goredis.test

3Type: cpu

4Time: Feb 3, 2025 at 6:50am (WIB)

5Duration: 12.97s, Total samples = 251.16s (1935.99%)

6Entering interactive mode (type "help" for commands, "o" for options)

7(pprof)

Once you run the above command, you can interact with the pprof tool to analyze

it. For example, you can write top 10 to find the top 10 functions that have

been sampled by CPU profiler.

1(pprof) top 10

2Showing nodes accounting for 196.87s, 78.38% of 251.16s total

3Dropped 298 nodes (cum <= 1.26s)

4Showing top 10 nodes out of 70

5 flat flat% sum% cum cum%

6 166.71s 66.38% 66.38% 166.71s 66.38% runtime/internal/syscall.Syscall6

7 5.21s 2.07% 68.45% 5.21s 2.07% runtime.(*timeHistogram).record

8 3.92s 1.56% 70.01% 3.92s 1.56% runtime.procyield

9 3.66s 1.46% 71.47% 4.68s 1.86% runtime.deductAssistCredit

10 3.52s 1.40% 72.87% 12.30s 4.90% runtime.exitsyscall

11 3.47s 1.38% 74.25% 164.78s 65.61% internal/poll.(*FD).Read

12 3.08s 1.23% 75.48% 136.93s 54.52% github.com/jauhararifin/goredis.readOne

13 3.07s 1.22% 76.70% 11.85s 4.72% runtime.mallocgc

14 2.16s 0.86% 77.56% 7.49s 2.98% runtime.casgstatus

15 2.07s 0.82% 78.38% 4.45s 1.77% runtime.reentersyscall

From the result above, you can see 10 lines of output, each line representing a

sampled function. You can see the first line is for the function

runtime/internal/syscall.Syscall6. This is the function used by Go’s runtime

to perform syscall. There are about

5 numbers on the left side of the function name. Those 5 numbers are basically

rooted in 2 sources, the flat and cum. The flat% is basically the same as

flat, but in percentage format. The same applies to cum and cum%.

flat represents how long your CPU spends its time inside that function. Higher

numbers mean that your CPU takes a lot of time executing that function. But, it

doesn’t count any function it called. So, if you have a function foo that

calls another function bar, the execution of bar won’t be counted in the

flat. For example, if let’s say you have a function that takes a slice of

integers and filters the prime numbers from that slice, you might write that

function like this:

1func filterPrime(s []int) []int {

2 result := make([]int, 0, len(s))

3 for _, x := range s {

4 if isPrime(x) {

5 result = append(result, x)

6 }

7 }

8 return result

9}

10

11func isPrime(x int) bool {

12 ...

13}

The execution of isPrime won’t be counted in the flat number. So if let’s

say your filterPrime takes 10 seconds to finish, but the isPrime itself is

executed for 8 seconds, your flat number on filterPrime will be 2 seconds.

The flat% is just the percentage of total CPU time. In the report above, you

see the first line says that you have 251.16 seconds of profile data. This is a

little bit misleading because you remember we only ran the benchmark test for

14.241 seconds. Well, this is because CPU time is not the same as wall-clock

time. CPU time measures the time used by CPU, not elapsed time. On my PC, I have

about 24 CPUs, which means each CPU takes about 10 seconds. Of course, this is

just an approximation; one CPU might execute more instructions than the others.

Ok, back to the flat%. You see the flat number in the first function is

166.71 seconds. If you divide it by the total profiling time (251.16 seconds),

you will get 66.38%, which is the number of flat%.

The sum% is basically just the sum of flat%. The value of sum% of the 5th

row is the sum of flat% at 1st, 2nd, 3rd, 4th, and 5th rows.

1 flat% sum%

2 +--66.38% 66.38% runtime/internal/syscall.Syscall6

3 | 2.07% 68.45% runtime.(*timeHistogram).record

4sum up -+ 1.56% 70.01% runtime.procyield

5to | 1.46% 71.47% runtime.deductAssistCredit

672.87% +---1.40% 72.87% runtime.exitsyscall

7 ^

8 |

9 +-- here 72.87% was the sum of 66.38% + 2.07% + 1.56% + 1.46% + 1.40%

Now, let’s talk about cum. cum is actually pretty straightforward. It’s the

cumulative sample from a function and any other function it called. Let’s use

the filterPrime function above. While flat shows how long the CPU spends its

time on that function only, cum shows how long the CPU spends its time on

that function plus any other function called by it. So, if our filterPrime

runs for 10 seconds, and the isPrime alone takes 8 seconds, then the cum

will be 10 seconds.

There are a bunch of other commands you can use other than top 10 to analyze

the profiling result. One of my favorites is the web command. If you run web

command, a browser will be opened showing the result in graphical format.

Similarly, each box in the graph represents a single function. Boxes with redder

color mean their cum number is bigger. The greyer color means its cum number

is smaller. You can see, each box has some numbers as well. For example, in

goredis.readObject box, there is 0.36s (0.14%) of 135.92s (54.12%), this shows

the flat and cum numbers. While the color shows the magnitude of cum

value, the font size shows the magnitude of flat value. So, bigger flat

numbers will be represented as bigger font.

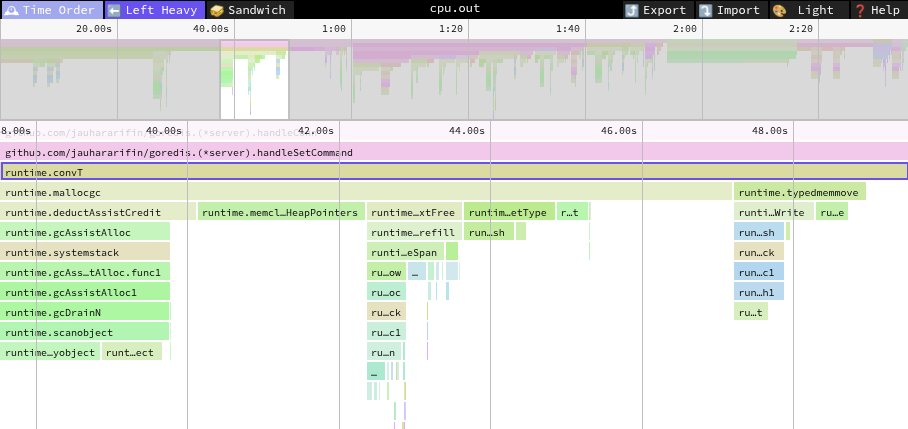

Now, apart from the box, if you use graphical representation, you will also see arrows pointing from one box to another box. These arrows represent function calls. Take a look at the picture below:

In the above picture, there are 3 arrows coming out of goredis.readObject, and

those arrows point to runtime.convTstring, goredis.readBulkString, and

goredis.readOne. This means that goredis.readObject calls those 3 functions.

A function might not be shown in the graph if it’s too small. So, be aware that

if you don’t see a function in the profiling graph, it doesn’t mean it’s not

being called.

Now, notice that each arrow has a text containing a duration. This duration

represents the time our CPU takes to execute the function called by

goredis.readObject. If you sum up the flat number of goredis.readObject

and the numbers of the arrows, you will get: 0.36s + 1.64s + 117.21s + 16.71s =

135.92s which is the same as the cum number of goredis.readObject.

The arrows also have different thicknesses and colors. Red arrows mean the CPU takes more time executing that path. You can think that the color represents the number of the arrow. Thick arrows mean that the CPU takes a lot of time executing that path.

Using graph representation can help us trace back the origin of our bottleneck.

We can see here that the syscall takes a lot of CPU time and it is coming

originally from readArray.

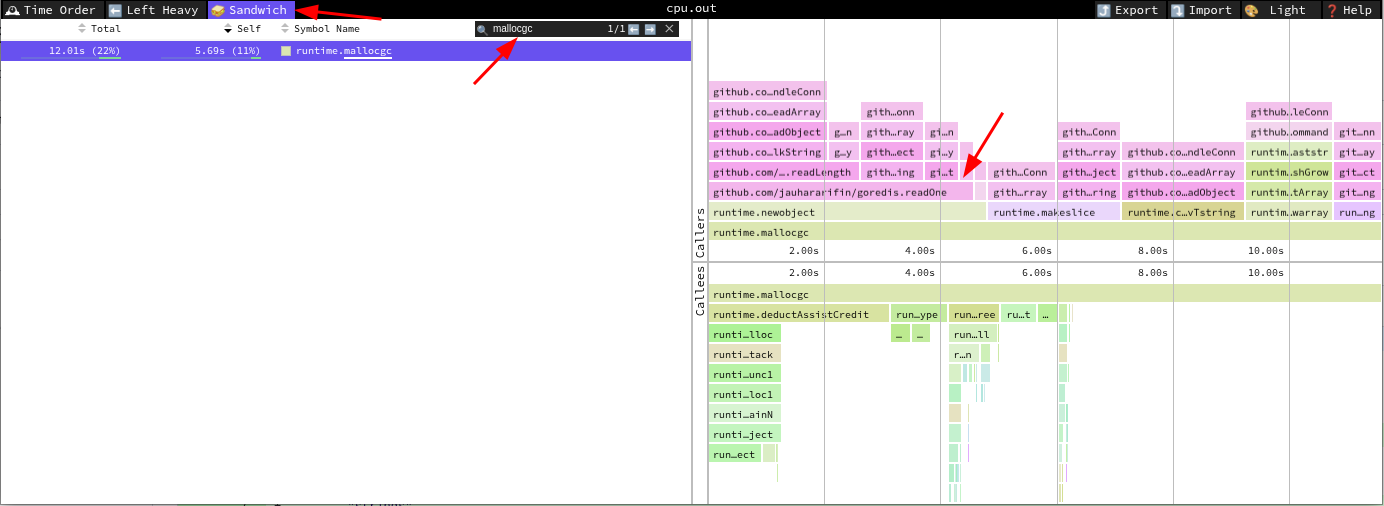

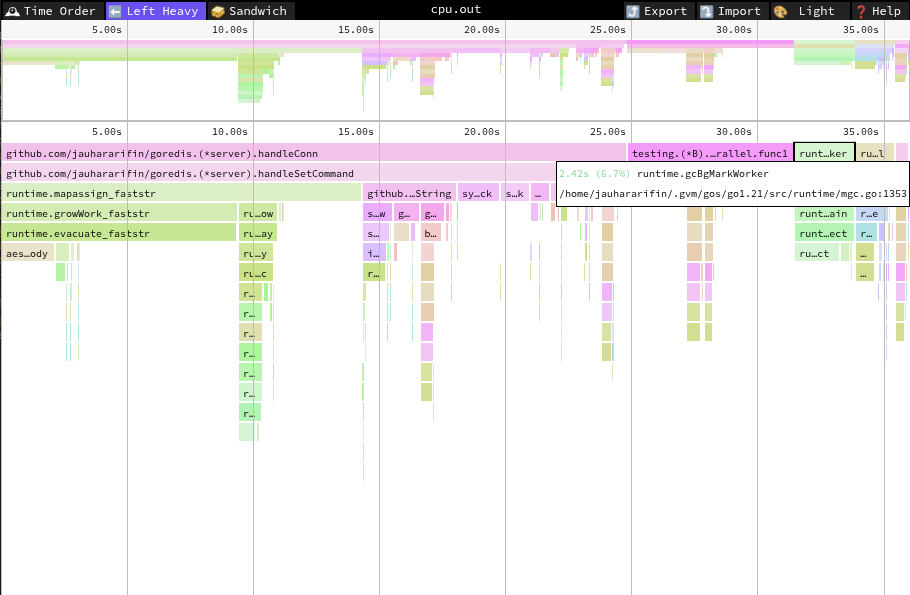

There is one more way you can analyze the CPU profiling result: you can use

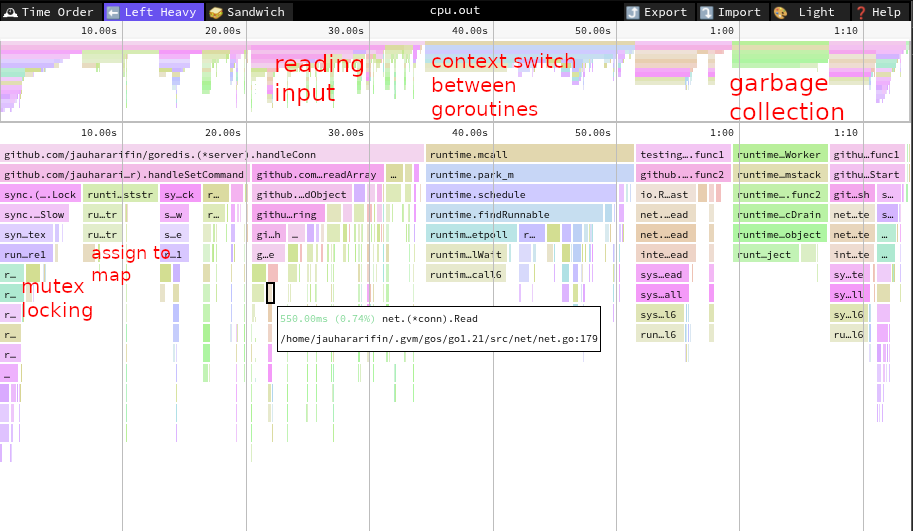

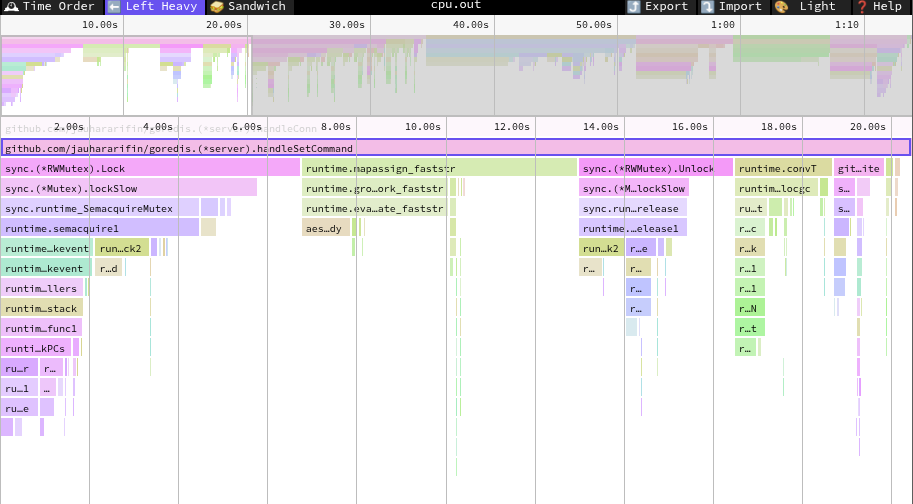

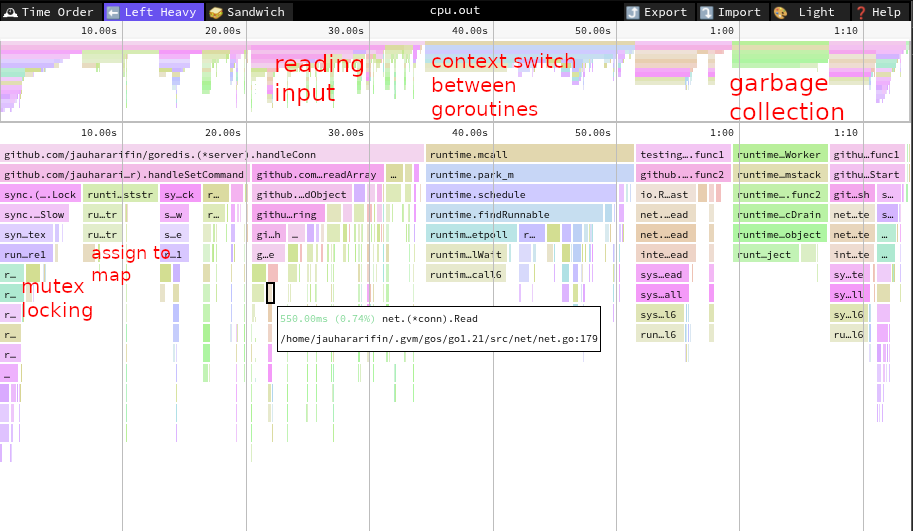

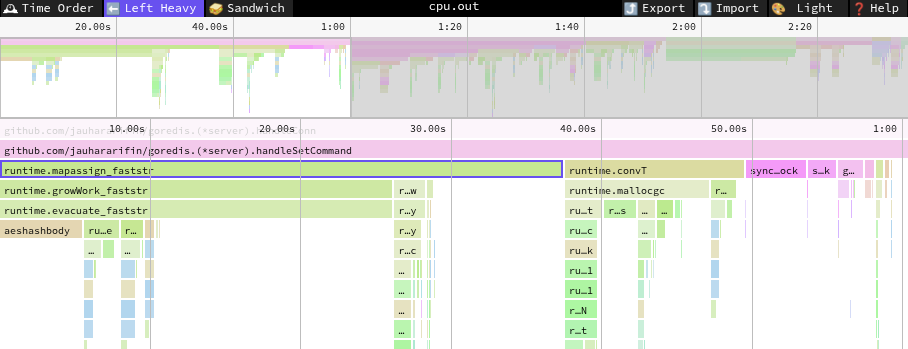

speedscope. You can drag and drop your cpu.out

file to this app and it will show you a flamegraph.

Similarly, each block in this graph represents a function. If you notice, the

blocks are stacked on top of each other. The stack represents a function call.

Wider blocks mean the CPU takes more time executing that block. You can also

find the cum and flat values in the bottom left corner of the graph.

Well, there are still other ways to visualize the CPU profiling result, but we will focus on the three methods above.

Syscall

Let’s go back to our CPU profile graph.

You will see that the box with the biggest font is syscall.Syscall6. This

indicates that our CPU spends a lot of time there. And if you look carefully,

this syscall originates from readArray, which calls readObject,

readBulkString, readLength, readOne, and then calls (*net.conn).Read.

So, the CPU spends a lot of time reading the client’s request and parsing it.

The parsing part is actually not that bad, judging from the flat value of

readArray, readObject, readBulkString, readLength, and readOne. All of

them executed less than 1% of the CPU time. The biggest contribution is actually

from syscall.Syscall6. What is this syscall.Syscall6?

You can think of a syscall as an

API for the kernel. Your computer most likely runs on an operating system. The

kernel is part of the operating system. It manages the resources in your

computer. CPU, memory, and storage are some of the resources it handles. It also

helps us talk to other hardware like monitors, printers, and keyboards through

drivers. Our application is like an isolated environment where we perform some

basic tasks like adding two numbers, looping, calling a function, etc. But when

it comes to allocating memory, showing something on the screen, listening to a

socket, or sending data to other processes, we can’t do those alone without the

kernel. Syscall is an API to talk to the kernel to do all of that. There are

syscalls for allocating memory, writing to a file, receiving bytes from other

processes, sending TCP packets through network interfaces, etc. In Go,

syscall.Syscall6 has this signature:

1func Syscall6(trap, a1, a2, a3, a4, a5, a6 uintptr) (r1, r2 uintptr, err Errno)

It accepts a bunch of integers and returns two integers and an error code. These numbers are the parameters of the syscall. One of the numbers might represent what kind of syscall we want to call. There is a code to call syscall to write a file, another code to allocate memory, etc. One of the parameters might also contain what file we want to read, or which address we want to write to, etc. We won’t go into too much detail on these parameters. You just need to know they’re there.

Now, the problem with syscalls is that they are quite slow. It won’t be that bad if you call syscalls fifty or a hundred times. But if you call them millions of times, the overhead adds up. Unlike an ordinary function call, syscalls have additional overhead. Your CPU needs to switch to kernel space, which means all of your application states need to be preserved during the syscall and restored after the syscall finishes. It also needs to switch into a higher privilege level in the CPU, and this has its own overhead too. Some syscalls like reading data from a socket also need to copy the data from kernel buffer to user buffer. There are other overheads that I don’t mention here, but the point is they have big overhead.

Buffered Reader

One trick to improve performance is by making expensive operations less

frequent. We can consider syscalls as expensive operations here. In our code,

when we parse the request from the client, we use the readOne helper function

that reads one byte from net.Conn. Reading a byte from net.Conn requires one

syscall. If we read 10 bytes, then we need 10 syscalls. This overhead adds up

and makes our application slower. To reduce the overhead, we can batch bytes

together. For example, instead of asking for one byte at a time, we read 1000

bytes at once using one syscall. We can store those 1000 bytes in a []byte in

our app. Whenever we need to read a byte, we just check our local []byte. If

it has a byte, then we read from it. But if it’s empty, we use syscall again to

read another 1000 bytes. By doing this, reading 2000 bytes doesn’t have to use

2000 syscalls; we can just use 2 syscalls. That sounds great, but why limit it

to 1000? Why don’t we just read 1 million bytes in one syscall? Well, smaller

batch sizes mean more syscalls, which means a lot of overhead. But bigger batch

sizes mean we need more memory to store it, and a single syscall might take

longer. If we use a very big batch size, the available bytes might not be able

to catch up to our consumption. We might read it faster than the client sends

it, which is not really a problem, but we will waste some memory. People often

use 4096 as the batch size. This is because that’s usually the minimum size of

the buffer that resides in the kernel. Also, it’s the size of a single memory

page, which means we can map it into a single entry in the

page table. I personally don’t have

a very strong opinion on this number. If you want to get the best number, you

can just experiment with it. If your server and client are in the same data

center, you might have more throughput with a bigger batch size because your

bandwidth is bigger.

Fortunately, Go has a really nice standard library that can help us with this.

We can use bufio.Reader to do this.

bufio.Reader does what I described above. It wraps an io.Reader and buffers

it. By default, bufio.Reader uses 4096 bytes to buffer the read bytes. To use

bufio.Reader, we can just call bufio.NewReader(...) and pass our net.Conn

(remember, net.Conn is an io.Reader as it implements the Read function).

1func (s *server) handleConn(clientId int64, conn net.Conn) {

2 ...

3 // We wrap the conn with bufio.NewReader.

4 // Now, every `Read` call will use the buffer provided by bufio.Reader

5 // until there are no more available bytes. Once the buffer is empty,

6 // it will call `conn.Read` to fill in the buffer again.

7 reader := bufio.NewReader(conn)

8 for {

9 // Now we use the wrapper connection instead of the original `conn`.

10 request, err := readArray(reader, true)

11 ...

12 }

13 ...

14}

That’s it. We just wrap the conn with bufio.Reader and it should be done.

You can check the code in

this commit.

Next, let’s run the benchmark again to see the result.

1❯ go test . -v -bench=BenchmarkRedisSet -benchmem -benchtime=10s -cpuprofile=cpu.out -run="^$"

2goos: linux

3goarch: amd64

4pkg: github.com/jauhararifin/goredis

5cpu: AMD Ryzen 9 7900X 12-Core Processor

6BenchmarkRedisSet

7BenchmarkRedisSet-24 11713911 903.7 ns/op 1106589 ops/sec 566 B/op 42 allocs/op

8PASS

9ok github.com/jauhararifin/goredis 11.868s

Now, our throughput is about 1.1 million SET operations per second. That’s a

40% improvement from the previous 776K SET operations per second.

If you check the benchmark result again, now readArray is not the bottleneck

anymore.

Premature Optimization: Buffered Writer

Remember, the key to improving your app’s performance is identifying your bottleneck first. Improving the performance in places that are not bottlenecks won’t give you much performance improvement. There is nothing wrong with improving things out of context if it’s a low-hanging fruit. Maybe you can improve your app performance by 1% by just adding one line of code; that’s fine. It’s not a difficult thing to do, so you might as well do it. But if the improvement is very complex and it is not the bottleneck, you might end up wasting a lot of time for just 1% of performance improvement.

On some occasions, improving your performance by 1% can matter, especially when you’re working on a very performance-critical system like a database or operating system.

Since our read is now buffered, we may think that it makes sense to buffer our write as well. When we send a response to the client, we can put it in a buffer first and send it only when the buffer is full. By doing this, we will have a smaller number of syscalls to send the response.

However, unlike making a buffered reader, making a buffered writer is not as

easy as wrapping our connection with

bufio.Writer. The way bufio.Writer works

is similar to bufio.Reader. It allocates a slice of bytes in our application

memory and writes the data there instead of directly to the underlying writer.

In our case, if we wrap our connection with bufio.Writer, we will write to

local bytes first and send it through the socket interface once the buffer is

full. This is not what we want. If we do it naively like this, there might be

some messages that will never be sent to the socket. Imagine our bufio.Writer

uses 4096 bytes for its buffer size. Now, let’s say there is a client sending

SET "key1" "value" to our server. As a result, our server needs to send back

OK. Now, instead of sending it through the socket, we write it to our local

buffer. Because OK is just 2 bytes (not 4096 or more), the bufio.Writer

won’t send it through the socket connection and we’re stuck here.

As an analogy, imagine you want to ride a roller coaster in a theme park. The roller coaster runs for about 5 minutes. To maximize utility, you want to wait until the roller coaster is full before you run it. This works pretty well when it’s crowded. But imagine when the theme park is not very crowded; there might be only 2 people who want to ride the roller coaster. In this case, the roller coaster will never be full and it will never run.

In order to solve the above problem, we need to have some timeout. Instead of waiting until the buffer is full, we need to limit our waiting time to some timeout, let’s say 10ms. So, if in 10ms the buffer is not full, we should send it anyway. This design, however, has some downsides. Now, for a response to be sent, it has to wait at most 10ms. If our traffic is high, our buffer might be full faster than 10ms and we get a lot of improvement there. But if our traffic is very low, a single request might take 10ms before the response itself is sent. This tradeoff is something you should be aware of before doing performance improvement. You should ask yourself whether it’s okay to increase the latency a little bit in order to get more throughput. In some cases, it might not be okay. In other cases, it might be okay.

There is a similar algorithm in computer networking called Nagle’s algorithm. Basically, when sending a packet through TCP, we can delay it first so we can send more packets. The details are a little bit different. It doesn’t use timeout as a fallback mechanism, but the idea is similar.

Let’s start by creating a buffered writer struct:

1type bufferWriter struct {

2 w io.Writer

3 buff []byte

4 n int

5}

6

7func newBufferWriter(w io.Writer) *bufferWriter {

8 return &bufferWriter{

9 w: w,

10 buff: make([]byte, 4096),

11 n: 0,

12 }

13}

Let’s continue implementing the Write method:

1func (b *bufferWriter) Write(buff []byte) (int, error) {

2 totalWrite := 0

3 for len(buff) > len(b.buff)-b.n {

4 var n int

5 if b.n == 0 {

6 n, _ = b.w.Write(buff)

7 } else {

8 n = copy(b.buff[b.n:], buff)

9 b.n += n

10 _ = b.flush()

11 }

12 totalWrite += n

13 buff = buff[n:]

14 }

15 n := copy(b.buff[b.n:], buff)

16 b.n += n

17 totalWrite += n

18 return totalWrite, nil

19}

20

21func (b *bufferWriter) flush() error {

22 panic("todo")

23}

In the above code, the logic is pretty straightforward. We are going to write

the data in the buff parameter to our buffer. If adding the buff to an

existing buffer will make the buffer full, we just put part of buff to our

buffer so that our buffer becomes full and then flush it. We have a special case

where our internal buffer is empty. In this case, we can just write the whole

buff directly. The rest of the code is straightforward; you just need to put

the remaining buff to the internal buffer and update the metadata.

Implementing the flush function is quite straightforward as well:

1func (b *bufferWriter) flush() error {

2 if b.n == 0 {

3 return nil

4 }

5

6 n, err := b.w.Write(b.buff[:b.n])

7 if n < b.n && err == nil {

8 err = io.ErrShortWrite

9 }

10 if err != nil {

11 if n > 0 && n < b.n {

12 copy(b.buff[0:b.n-n], b.buff[n:b.n])

13 }

14 b.n -= n

15 return err

16 }

17 b.n = 0

18 return nil

19}

To flush our internal buffer to the socket, we just need to call b.w.Write and

pass our buffer. This might work well if our underlying writer is a socket. But

let’s think bigger and assume that the internal writer can be any writer. When

we call b.w.Write, it returns an integer n indicating how many bytes are

written. This n is not guaranteed to be as big as the bytes we pass to

b.w.Write; it can be less. When this happens, we want to return an error,

io.ErrShortWrite to be precise, and update our internal buffer so that it only

includes the bytes that failed to be written. This is technically unnecessary

for our use case, but it’s nice to have more generic functions like this.

Now, so far we’ve implemented the buffered writer like bufio.Writer. We

haven’t added the timeout yet. We can spawn a separate goroutine that will flush

our buffer every 10ms. Note that I pick 10ms as the timeout out of thin air

here. You might wonder, why not 1ms or 20ms? Well, there is no particular reason

for that. You want the number to be big enough so that it can gather a lot of

bytes before it sends them to the socket. But it also needs to be small enough

so that your latency won’t be that bad.

One thing that you need to be careful about here is race conditions. Now because we spawn a new goroutine that flushes the buffer periodically, there is now a chance that two goroutines will access our local buffer concurrently. You need a lock to avoid this problem.

Periodically calling flush also has another problem. Now, your CPU needs to

wake up every 10ms and check whether there are bytes in the buffer that can be

sent through the socket. You might think that 10ms is not that bad, but actually

if you have 1000 clients, your CPU might need to wake up way more often than

10ms. So, instead of doing that, let’s make the CPU completely sleep when the

buffer is empty. Only when someone fills it with some bytes do we want to start

the 10ms timer to send the message. So, if nothing is written to the local

buffer, we just sleep endlessly. Once something is written to the local buffer,

we will wake up, wait for 10ms and send the buffer through the socket if the

buffer is not empty.

To implement the above idea, we can use the sync.Cond utility provided by

Golang. sync.Cond is almost like sync.Mutex. You can acquire a lock using

.Lock() just like sync.Mutex. You can also unlock it using .Unlock().

Additionally, it provides two more methods, which are .Wait() and .Signal().

Well, actually it also has one more method called Broadcast, which is almost

like Signal(), but we won’t be using that.

So, what does Wait() do? It’s a little bit complex, so buckle up. In order to

call Wait, you must first lock the sync.Cond. If you call Wait without

locking the sync.Cond, a panic will be raised. Now, calling Wait will unlock

the sync.Cond (remember, you need to lock the sync.Cond before calling

Wait), and pause the current goroutine until someone calls Signal or

Broadcast. So, once you call Wait, the lock will be released and you will be

sleeping until Signal is called to wake you up. Once you are woken up by

someone that calls Signal, you will acquire the lock of sync.Cond again.

Notice that before you call Wait(), you have the lock and you will have the

lock again after the Wait() returns. So, apparently, this property is very

useful to wait for some condition. That’s why it’s called Cond and the method

is called Wait.